FingerSpell:

Translating ASL Hand Signs Into Synthesized Speech

Faculty Advisor:

Tony Scarlatos

Lecturer, Computer Science

SUNY at Stony Brook

Programmer:

Dmitri Nesterenko


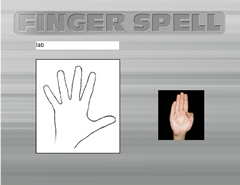

FingerSpell is a physical computing application that translates hand gestures into synthesized speech. Its purpose is to facilitate communication between users of the American Sign Language (ASL) alphabet and English speakers who do not know ASL.

The hardware interface is a lightweight, comfortable, custom-built data glove fitted with bend and touch sensors. Analog signals from the glove are converted to MIDI channel messages, which the computer reads. The software processes this gesture input and, if a gesture belongs to the ASL alphabet, adds the corresponding letter to the output.

The software responds to recognized gestures with both visual and auditory feedback: recognized letters are written to a text field, and a graphic of each ASL letter is displayed. Once the user gestures that a word is finished, the word in the output field is pronounced using synthesized speech.

An embedded database of 10,000 common English words is used for word completion. Like the address field of a web browser, the application attempts to complete words for the signer based on the current content of the field. In this way, many words can be signed with only a few gestures, expediting communication.

The FingerSpell application is written in Lingo, the scripting language of Macromedia Director MX. An extension to Director, XMidi, enables Director MX to receive and process MIDI channel messages in real time. The prototype runs on either Mac OS X or Windows XP.

This project was presented at the CIT conference at Stony Brook (June 2004), and a paper about the project will be published in The Journal of Educational Technology Systems this spring. This work is supported by an NSF CRCD grant (#0203333).
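In FingerSpell, the glove's analog signals reach the computer as MIDI channel messages, which XMidi delivers to Director. The sketch below illustrates in Python what decoding such messages involves. It rests on two assumptions not stated in the project description: that each sensor is sent as a MIDI Control Change message (status byte 0xB0 plus channel, then a controller number and a 0-127 value), and that controllers map to sensors as in the hypothetical `CONTROLLER_MAP`. The function names are likewise illustrative, not the project's Lingo code.

```python
from typing import Dict, List, Tuple

CONTROL_CHANGE = 0xB0  # high nibble of a MIDI Control Change status byte

# Hypothetical mapping from controller numbers to glove sensors (an assumption,
# not taken from the FingerSpell hardware).
CONTROLLER_MAP: Dict[int, str] = {1: "thumb_bend", 2: "index_bend", 3: "middle_bend"}


def parse_control_changes(data: bytes) -> List[Tuple[int, int, int]]:
    """Return (channel, controller, value) for each complete Control Change message.

    Assumes every message carries its own status byte (no running status);
    bytes that do not start a Control Change are skipped.
    """
    events = []
    i = 0
    while i < len(data):
        status = data[i]
        if status & 0xF0 == CONTROL_CHANGE and i + 2 < len(data):
            channel = status & 0x0F  # low nibble holds the channel (0-15)
            events.append((channel, data[i + 1], data[i + 2]))
            i += 3
        else:
            i += 1  # not a complete Control Change; move on
    return events


def update_sensor_state(
    state: Dict[str, int], events: List[Tuple[int, int, int]]
) -> Dict[str, int]:
    """Record the latest 0-127 value for every mapped sensor; unmapped controllers are ignored."""
    for _channel, controller, value in events:
        name = CONTROLLER_MAP.get(controller)
        if name is not None:
            state[name] = value
    return state
```

For example, `update_sensor_state({}, parse_control_changes(bytes([0xB0, 1, 100, 0x90, 60, 64, 0xB0, 2, 5])))` skips the Note On message in the middle and yields `{"thumb_bend": 100, "index_bend": 5}`. Keeping only the latest value per sensor gives the recognizer a current snapshot of the hand's posture.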
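The description states that a gesture is accepted only if it belongs to the ASL alphabet, but it does not say how that decision is made. One simple way to make it, shown here purely as an assumed stand-in technique, is nearest-template matching: compare the current sensor reading against one stored template per letter, choose the closest, and reject the gesture if even the closest template is farther than a threshold. The templates, the threshold, and `classify_gesture` are hypothetical.

```python
import math
from typing import Dict, Optional, Sequence


def classify_gesture(
    reading: Sequence[float],
    templates: Dict[str, Sequence[float]],
    max_distance: float,
) -> Optional[str]:
    """Return the letter whose template is nearest to reading, or None if none is close enough.

    Nearest-template matching is an illustrative assumption, not FingerSpell's documented method.
    Every template must have the same number of sensor values as reading.
    """
    best_letter: Optional[str] = None
    best_distance = math.inf
    for letter, template in templates.items():
        distance = math.dist(reading, template)  # Euclidean distance (Python 3.8+)
        if distance < best_distance:
            best_letter, best_distance = letter, distance
    # Reject readings that are not close to any letter of the alphabet.
    return best_letter if best_distance <= max_distance else None


# Hypothetical templates: four finger-bend values and one touch value, each 0-127.
TEMPLATES = {
    "A": [120, 120, 120, 120, 10],
    "B": [0, 0, 0, 0, 100],
}
```

With these templates, `classify_gesture([118, 121, 119, 122, 12], TEMPLATES, 20.0)` returns `"A"`, while an ambiguous half-bent hand such as `[60, 60, 60, 60, 60]` returns `None` and adds nothing to the output. A single static snapshot cannot capture the ASL letters J and Z, which are signed with motion, so those would need additional handling.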
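The word completion described above, suggesting a whole word from the letters signed so far, amounts to a prefix lookup in the word list. The sketch below shows one way to do it with a sorted list and binary search. The class name `WordCompleter` is hypothetical, and returning the alphabetically first match is an assumption; the project's own database may rank candidates differently, for example by word frequency.

```python
import bisect
from typing import Iterable, List, Optional


class WordCompleter:
    """Prefix completion over a fixed word list (an illustrative sketch)."""

    def __init__(self, words: Iterable[str]) -> None:
        # Sorted, de-duplicated, lowercase list so words sharing a prefix are contiguous.
        self._words: List[str] = sorted({w.lower() for w in words})

    def candidates(self, prefix: str, limit: int = 5) -> List[str]:
        """Return up to `limit` words beginning with prefix, in alphabetical order."""
        prefix = prefix.lower()
        i = bisect.bisect_left(self._words, prefix)  # first word >= prefix
        matches: List[str] = []
        while i < len(self._words) and len(matches) < limit and self._words[i].startswith(prefix):
            matches.append(self._words[i])
            i += 1
        return matches

    def complete(self, prefix: str) -> Optional[str]:
        """Return the first completion for a non-empty prefix, or None if there is none."""
        if not prefix:
            return None
        matches = self.candidates(prefix, limit=1)
        return matches[0] if matches else None
```

For instance, with the words `["the", "there", "their", "hello", "help", "then"]`, `complete("hel")` returns `"hello"` and `candidates("the")` returns `["the", "their", "then", "there"]`. Because the list is sorted, each lookup costs one binary search plus a scan over the matches, which is fast enough for 10,000 words.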